Partner integrations at a fintech company never sleep. When your payment provider sends a webhook at 3 AM and your system misses it, someone loses money. At Unbanked, we had partners expecting real-time updates through sockets. We had internal services that needed to react to external events. And we had a load balancer sitting in the middle, breaking everything.

The symptoms were familiar. Socket connections dropped randomly. Events arrived late or not at all. Redis memory usage spiked during peak hours. The alerting was an afterthought—we’d find out about failures when partners complained.

Something had to change. The architecture needed a complete rethink.

What We Built

The project delivered three interconnected systems. First, a webhook subsystem that could receive, validate, and process incoming partner notifications reliably. Second, a socket strategy that maintained persistent connections with partners despite the load balancer. Third, an alerting subsystem that told us about failures before our partners did.

Each piece solved a specific problem. Together, they transformed how Unbanked communicated with the outside world.

The Load Balancer Problem

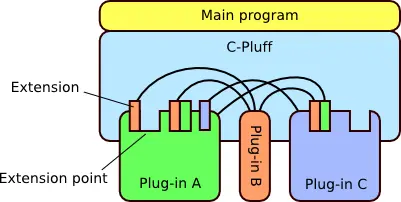

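Load balancers and WebSockets have a complicated relationship. The balancer distributes connections across multiple server instances. But a socket connection is stateful. Client A connects to Server 1, and only Server 1 holds that connection’s state. With Socket.IO, every request in Client A’s session, including any HTTP long-polling requests that precede the WebSocket upgrade, has to reach Server 1 specifically. And when Server 2 has an event meant for Client A, it has no direct way to deliver it.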

Our setup used sticky sessions based on IP addresses. This worked until it didn’t. Mobile clients changed IPs. Corporate networks rotated addresses. Connections landed on the wrong server.
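A toy model makes the failure mode concrete. It assumes the balancer picks a server by hashing the client IP modulo the server count. Real balancers use their own hash functions, and `hashIp`, `routeByIp`, `Server`, `connect`, and `request` are hypothetical names.

```typescript
// Toy model of IP-hash sticky sessions. The hash is a simple 31-multiplier
// string hash, not the one any particular load balancer uses.
function hashIp(ip: string): number {
  let h = 0;
  for (let i = 0; i < ip.length; i++) {
    h = (h * 31 + ip.charCodeAt(i)) >>> 0; // keep it an unsigned 32-bit int
  }
  return h;
}

// Pick a server index for a client IP.
function routeByIp(ip: string, serverCount: number): number {
  return hashIp(ip) % serverCount;
}

// Each server only knows about the sessions it accepted itself.
class Server {
  readonly id: number;
  private readonly sessions = new Set<string>();

  constructor(id: number) {
    this.id = id;
  }

  accept(sessionId: string): void {
    this.sessions.add(sessionId);
  }

  handle(sessionId: string): string {
    return this.sessions.has(sessionId)
      ? `server ${this.id}: ok`
      : `server ${this.id}: unknown session ${sessionId}`;
  }
}

const servers = [new Server(0), new Server(1), new Server(2)];

// A client connects from one IP; its session lives wherever the hash sent it.
function connect(ip: string, sessionId: string): number {
  const idx = routeByIp(ip, servers.length);
  servers[idx].accept(sessionId);
  return idx;
}

// A follow-up request for the same session, possibly from a new IP.
function request(ip: string, sessionId: string): string {
  return servers[routeByIp(ip, servers.length)].handle(sessionId);
}
```

The session never moves. Only the client’s address changes, and that alone is enough for the hash to send the next request to an instance that has never seen it.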

The fix required Socket.IO’s adapter pattern with Redis as the backbone. Every server instance published events to Redis. Every instance subscribed to relevant channels. When a message needed to reach a specific client, it didn’t matter which server handled the original request. Redis broadcast it to all servers. The server holding that connection delivered it.
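The broadcast-and-filter idea can be sketched in memory. `Bus` stands in for Redis pub/sub and `ServerInstance` for one server behind the balancer; both names are hypothetical. In production, Socket.IO ships this as its Redis adapter (`@socket.io/redis-adapter`, attached with `io.adapter(createAdapter(pubClient, subClient))`), which also handles rooms and the wire format. Socket.IO’s documentation notes that sticky sessions are still required while HTTP long-polling is enabled. The adapter solves cross-server delivery, not session affinity.

```typescript
// In-memory stand-in for Redis pub/sub: every subscriber sees every message.
type Envelope = { clientId: string; event: string; payload: unknown };
type Handler = (msg: Envelope) => void;

class Bus {
  private readonly subscribers: Handler[] = [];
  subscribe(handler: Handler): void {
    this.subscribers.push(handler);
  }
  publish(msg: Envelope): void {
    for (const handler of this.subscribers) handler(msg);
  }
}

// One server instance behind the load balancer.
class ServerInstance {
  readonly name: string;
  private readonly bus: Bus;
  // Sockets held locally by this instance, with a log of delivered events.
  private readonly local = new Map<string, string[]>();

  constructor(name: string, bus: Bus) {
    this.name = name;
    this.bus = bus;
    // Joining the cluster is just subscribing; there is no peer list to edit.
    bus.subscribe((msg) => this.deliverIfLocal(msg));
  }

  connect(clientId: string): void {
    this.local.set(clientId, []);
  }

  // Any instance can emit to any client: publish, and let the owner deliver.
  emitTo(clientId: string, event: string, payload: unknown): void {
    this.bus.publish({ clientId, event, payload });
  }

  // Only the instance holding the socket acts on the message.
  private deliverIfLocal(msg: Envelope): void {
    const inbox = this.local.get(msg.clientId);
    if (inbox) inbox.push(`${msg.event}:${JSON.stringify(msg.payload)}`);
  }

  received(clientId: string): string[] {
    return this.local.get(clientId) ?? [];
  }
}
```

Adding a third `ServerInstance` to the same bus is all it takes to scale out, which mirrors the horizontal-scaling point that follows.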

This pattern also gave us horizontal scaling. Add more servers behind the load balancer. They join the Redis pub/sub automatically. No configuration changes needed.

Event Processing with Bull Jobs

Raw webhooks are unpredictable. A partner might send one event per second or a thousand. Your processing logic might take milliseconds or minutes. Without a buffer, you’re either dropping events or choking your servers.

Bull queues gave us that buffer. Incoming webhooks hit a thin endpoint. We validated the signature, pushed the payload into a queue, and then acknowledged receipt. The partner got their 200 OK response in milliseconds.
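A framework-free sketch of the thin endpoint. It assumes the partner signs the raw request body with HMAC-SHA256 and sends the hex digest; each real partner documents its own scheme. `handleWebhook`, `JobQueue`, and the priority values are hypothetical, and the in-memory `JobQueue` stands in for a Bull queue (where the call would be `queue.add(data, opts)`). The payload is enqueued before the 200 goes back, so a failed enqueue becomes an error the partner can retry rather than a silently dropped event.

```typescript
import { Buffer } from "node:buffer";
import { createHmac, timingSafeEqual } from "node:crypto";

// Stand-in for a Bull queue: records what would be added.
type Job = { data: unknown; priority: number };
class JobQueue {
  readonly jobs: Job[] = [];
  add(data: unknown, priority: number): void {
    this.jobs.push({ data, priority });
  }
}

// Hypothetical priority table: lower number is processed first.
const PRIORITY = new Map<string, number>([
  ["payment.confirmed", 1],
  ["balance.updated", 5],
]);
const DEFAULT_PRIORITY = 10;

// Constant-time comparison of expected and received HMAC digests.
function signatureIsValid(rawBody: string, signatureHex: string, secret: string): boolean {
  const expected = createHmac("sha256", secret).update(rawBody).digest();
  const received = Buffer.from(signatureHex, "hex");
  // timingSafeEqual throws on a length mismatch, so check length first.
  return received.length === expected.length && timingSafeEqual(received, expected);
}

// Validate, enqueue, and only then acknowledge.
function handleWebhook(
  rawBody: string,
  signatureHex: string,
  secret: string,
  queue: JobQueue,
): { status: number } {
  if (!signatureIsValid(rawBody, signatureHex, secret)) return { status: 401 };

  let event: unknown;
  try {
    event = JSON.parse(rawBody);
  } catch {
    return { status: 400 };
  }
  if (typeof event !== "object" || event === null) return { status: 400 };

  const rawType = (event as { type?: unknown }).type;
  const type = typeof rawType === "string" ? rawType : "";
  try {
    // With a real queue, add() is async and must be awaited before responding.
    queue.add(event, PRIORITY.get(type) ?? DEFAULT_PRIORITY);
  } catch {
    return { status: 503 }; // the partner retries instead of the event vanishing
  }
  return { status: 200 };
}
```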

Behind the queue, workers processed events at a controlled pace. We defined priorities—payment confirmations ranked higher than balance updates. Failed jobs went to a retry queue with exponential backoff. After too many failures, they landed in a dead letter queue for manual review.
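The retry policy is easier to reason about with the timing spelled out. This sketch assumes delays of `baseMs * 2^(attempt - 1)` capped at `maxMs` and a fixed attempt budget; both are illustrative choices, not Bull’s exact built-in formula. `onJobFailed`, `RetryPolicy`, and the dead-letter array are hypothetical. In Bull itself, the closest knobs are the `attempts` and `backoff: { type: "exponential", delay }` job options, and jobs that exhaust their attempts stay in Bull’s failed set, so a separate dead letter queue is something you build on top.

```typescript
type QueuedJob = { id: string; priority: number; attemptsMade: number; enqueuedAt: number };
type RetryPolicy = { maxAttempts: number; baseMs: number; maxMs: number };

// Delay before retry number `attempt` (1-based): base, 2*base, 4*base, ... capped.
function backoffDelay(attempt: number, policy: RetryPolicy): number {
  return Math.min(policy.baseMs * 2 ** (attempt - 1), policy.maxMs);
}

// Lower priority number wins; ties go to the job that arrived first.
function nextJob(ready: QueuedJob[]): QueuedJob | undefined {
  return [...ready].sort((a, b) => a.priority - b.priority || a.enqueuedAt - b.enqueuedAt)[0];
}

type FailureOutcome = { kind: "retry"; runAt: number } | { kind: "dead-letter" };

// Called after a job throws: schedule a retry, or park it for manual review
// once maxAttempts total attempts (including the first) have failed.
function onJobFailed(
  job: QueuedJob,
  now: number,
  policy: RetryPolicy,
  deadLetters: QueuedJob[],
): FailureOutcome {
  job.attemptsMade += 1;
  if (job.attemptsMade >= policy.maxAttempts) {
    deadLetters.push(job);
    return { kind: "dead-letter" };
  }
  return { kind: "retry", runAt: now + backoffDelay(job.attemptsMade, policy) };
}
```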

The queue also solved the ordering problem. Some events depended on others. A refund event means nothing if the original payment event hasn’t been processed yet. Bull’s job dependencies let us chain related events together.
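The gating idea itself needs no queue library: an event that names a prerequisite is parked until that prerequisite has been processed, then released. This is a minimal in-memory sketch; `DependencyGate` and the `dependsOn` field are hypothetical, and a production version would persist parked events (in Redis, for example) so they survive restarts. Queue libraries differ in how they expose this; BullMQ, for instance, models dependencies as parent/child jobs through its `FlowProducer`.

```typescript
type PartnerEvent = { id: string; type: string; dependsOn?: string };

class DependencyGate {
  private readonly done = new Set<string>();
  // Events waiting on a prerequisite, keyed by the prerequisite's id.
  private readonly parked = new Map<string, PartnerEvent[]>();
  readonly processedOrder: string[] = [];

  receive(event: PartnerEvent): void {
    if (event.dependsOn !== undefined && !this.done.has(event.dependsOn)) {
      const waiting = this.parked.get(event.dependsOn) ?? [];
      waiting.push(event);
      this.parked.set(event.dependsOn, waiting);
      return;
    }
    this.process(event);
  }

  private process(event: PartnerEvent): void {
    this.processedOrder.push(event.id); // real handler logic would run here
    this.done.add(event.id);
    // Release anything waiting on this event; releases can cascade.
    const waiting = this.parked.get(event.id);
    if (waiting) {
      this.parked.delete(event.id);
      for (const next of waiting) this.process(next);
    }
  }

  pendingCount(): number {
    let n = 0;
    this.parked.forEach((list) => {
      n += list.length;
    });
    return n;
  }
}
```

A refund that arrives before its payment simply waits; once the payment is processed, the refund follows immediately.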

Redis Connection Management

Redis powered both the socket adapter and the job queues. That meant lots of connections. Each worker needed its own connection. Each server instance needed connections for pub/sub. The numbers added up fast.

We hit Redis connection limits during a traffic spike. The server logged errors. Events stopped flowing. Everything looked healthy on the surface until you checked Redis.

The solution was connection pooling with aggressive reuse. We created shared connection pools for common operations. Read operations shared one pool. Write operations shared another. The socket adapter got dedicated connections, because a Redis connection in subscriber mode is limited to subscription commands (under the default RESP2 protocol) and can’t be handed back to a shared pool.
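A toy version of that split: capped pools that hand out and take back connections, plus a subscriber connection that never enters a pool. `ConnectionPool`, `FakeConnection`, and the caps are hypothetical. With a real client you would wrap actual Redis connections, and a production pool would queue waiters instead of returning `undefined` when exhausted.

```typescript
// Placeholder for a real Redis client connection.
type FakeConnection = { id: number; role: string };

let nextId = 1;
function openConnection(role: string): FakeConnection {
  return { id: nextId++, role };
}

class ConnectionPool {
  private readonly idle: FakeConnection[] = [];
  private open = 0;
  private readonly role: string;
  private readonly max: number;

  constructor(role: string, max: number) {
    this.role = role;
    this.max = max;
  }

  // Reuse an idle connection first; open a new one only below the cap.
  acquire(): FakeConnection | undefined {
    const reused = this.idle.pop();
    if (reused) return reused;
    if (this.open >= this.max) return undefined; // caller should back off
    this.open += 1;
    return openConnection(this.role);
  }

  release(conn: FakeConnection): void {
    this.idle.push(conn);
  }

  get openCount(): number {
    return this.open;
  }
}

// Separate pools for reads and writes, capped independently.
const readPool = new ConnectionPool("read", 4);
const writePool = new ConnectionPool("write", 2);
// Pub/sub gets its own long-lived connection outside any pool.
const subscriber = openConnection("subscriber");
```

The caps turn "we ran out of Redis connections" from a surprise into a number you chose and can alert on.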

We also tuned the connection settings. Shorter idle timeouts freed up unused connections faster. Connection health checks caught zombie connections before they caused problems. Monitoring dashboards showed connection counts alongside the usual metrics.
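A sketch of a periodic sweep that applies both settings. It assumes each pooled connection records when it was last used and that the health check can be expressed as a boolean probe (with Redis, typically a `PING` that must answer within a deadline). The names and thresholds are hypothetical.

```typescript
type PooledConn = { id: number; lastUsedAt: number; inUse: boolean };
type SweepResult = { kept: PooledConn[]; closedIdle: number[]; closedUnhealthy: number[] };

// Close connections idle past the timeout, then drop any that fail the probe.
function sweep(
  conns: PooledConn[],
  now: number,
  idleTimeoutMs: number,
  isHealthy: (c: PooledConn) => boolean,
): SweepResult {
  const result: SweepResult = { kept: [], closedIdle: [], closedUnhealthy: [] };
  for (const c of conns) {
    if (c.inUse) {
      result.kept.push(c); // never pull a connection out from under a caller
    } else if (now - c.lastUsedAt > idleTimeoutMs) {
      result.closedIdle.push(c.id);
    } else if (!isHealthy(c)) {
      result.closedUnhealthy.push(c.id); // "zombie": socket open, peer unresponsive
    } else {
      result.kept.push(c);
    }
  }
  return result;
}
```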

The Alerting Subsystem

Knowing about failures after the fact isn’t good enough. The alerting system watched for specific patterns. A webhook that failed three times in a row. A socket connection that dropped and couldn’t reconnect. A queue that grew faster than workers could process it.
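Each of the three patterns maps onto a small stateful check. The "three in a row" threshold comes from the text; the reconnect budget, the backlog sample window, and all names are hypothetical. The backlog rule treats "queue depth rose on every one of the last N samples" as the sign that arrivals are outpacing the workers.

```typescript
type Alert = { rule: string; subject: string };

// Fires once per streak, when a webhook type fails `threshold` times in a row.
class ConsecutiveFailureRule {
  private readonly streaks = new Map<string, number>();
  private readonly threshold: number;

  constructor(threshold: number) {
    this.threshold = threshold;
  }

  record(webhookType: string, ok: boolean): Alert | null {
    if (ok) {
      this.streaks.set(webhookType, 0);
      return null;
    }
    const streak = (this.streaks.get(webhookType) ?? 0) + 1;
    this.streaks.set(webhookType, streak);
    return streak === this.threshold ? { rule: "webhook-failures", subject: webhookType } : null;
  }
}

// Fires when a partner socket has used up its reconnect attempts.
function reconnectRule(partner: string, failedAttempts: number, maxAttempts: number): Alert | null {
  return failedAttempts >= maxAttempts ? { rule: "socket-reconnect", subject: partner } : null;
}

// Fires when queue depth rose on each of the last `window` samples.
class BacklogRule {
  private readonly depths: number[] = [];
  private readonly queue: string;
  private readonly window: number;

  constructor(queue: string, window: number) {
    this.queue = queue;
    this.window = window;
  }

  sample(depth: number): Alert | null {
    this.depths.push(depth);
    if (this.depths.length > this.window + 1) this.depths.shift();
    if (this.depths.length < this.window + 1) return null;
    for (let i = 1; i < this.depths.length; i++) {
      if (this.depths[i] <= this.depths[i - 1]) return null;
    }
    return { rule: "queue-backlog", subject: this.queue };
  }
}
```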

Each alert type had its own severity and notification channel. Payment failures went to Slack and PagerDuty. Queue backlogs started with Slack and escalated if they persisted. Connection issues were logged to our monitoring system first.
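The routing described above fits in a single function. This sketch hard-codes the three cases from the text; the 15-minute escalation window and all names are hypothetical, and the returned channel names would map to real Slack and PagerDuty integrations elsewhere.

```typescript
type Channel = "slack" | "pagerduty" | "monitoring";
type AlertKind = "payment-failure" | "queue-backlog" | "connection-issue";

const ESCALATE_AFTER_MS = 15 * 60 * 1000; // hypothetical escalation window

// Where an alert goes, given how long its condition has persisted.
function routeAlert(kind: AlertKind, persistedMs: number): Channel[] {
  switch (kind) {
    case "payment-failure":
      return ["slack", "pagerduty"]; // page immediately
    case "queue-backlog":
      return persistedMs >= ESCALATE_AFTER_MS ? ["slack", "pagerduty"] : ["slack"];
    case "connection-issue":
      return ["monitoring"]; // logged first; later escalation is not modeled here
  }
}
```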

The alerting code lived alongside the features it monitored. When we added a new webhook type, we added its alerts in the same pull request. This kept the coverage complete without requiring a separate alerting team.
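One way to make "alerts ship with the feature" hard to skip is to put the alert rules inside the webhook type’s own definition and have registration reject a definition without them. This is a hypothetical sketch of that idea rather than a description of Unbanked’s code; `WebhookTypeDef`, `registerWebhookType`, and the field names are invented for illustration.

```typescript
type Severity = "page" | "notify" | "log";
type AlertRuleDef = { name: string; severity: Severity; consecutiveFailures: number };

// Everything needed to add a webhook type lives in one definition.
type WebhookTypeDef = {
  type: string;
  priority: number; // queue priority, lower runs first
  parse: (body: unknown) => boolean; // format check for this payload
  alerts: AlertRuleDef[];
};

const registry = new Map<string, WebhookTypeDef>();

function registerWebhookType(def: WebhookTypeDef): void {
  if (registry.has(def.type)) {
    throw new Error(`webhook type ${def.type} is already registered`);
  }
  // A definition without alerts fails as soon as it is registered,
  // which a CI test run that loads the registry would catch.
  if (def.alerts.length === 0) {
    throw new Error(`webhook type ${def.type} has no alert rules`);
  }
  registry.set(def.type, def);
}

registerWebhookType({
  type: "payment.confirmed",
  priority: 1,
  parse: (body) => typeof body === "object" && body !== null && "paymentId" in body,
  alerts: [{ name: "payment-confirm-failing", severity: "page", consecutiveFailures: 3 }],
});
```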

Results

Partner complaints about missed events dropped to near zero. We could trace any webhook from receipt to final processing. The socket connections stayed stable even during deployments. Redis memory usage became predictable.

The architecture also made future work easier. Adding a new partner meant defining their webhook format and queue priority. The infrastructure handled the rest.

Backend architecture work rarely makes headlines. But it determines whether your product works at scale. If you’re dealing with similar challenges—unreliable integrations, unpredictable load, or systems that fail silently—I’d be happy to discuss approaches.