Playing snake is easy for humans. We look ahead, spot the food, avoid walls and our own tail. But teaching a computer to do this without explicit rules? That is a different challenge.

I wanted to see if snakes could learn to survive on their own. No hard-coded pathfinding. No preprogrammed strategies. Just evolution. The fittest survive and pass on their traits. The rest die off.

This project became my experiment in genetic algorithms and neural networks. It is built entirely in vanilla JavaScript and runs right in the browser.

What It Does

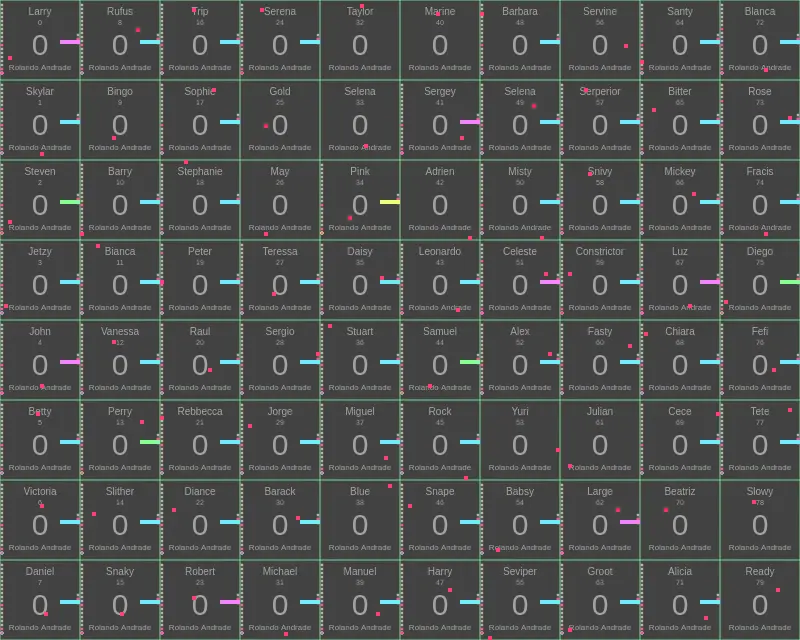

The screen displays 80 snakes playing simultaneously. Each snake has its own brain: a small neural network that decides whether to turn left, go straight, or turn right.

At first, the snakes move randomly. Most crash into walls or their own bodies within seconds. But some survive longer than others. Maybe they got lucky. Maybe their random brain weights happened to help them avoid obstacles.

After all snakes die, the next generation begins. The highest-scoring snakes pass their neural network weights to their offspring. Some weights get mixed between parents. Some get randomly mutated. Over many generations, the snakes get better at staying alive.

The Neural Network

Each snake has a neural network with four layers. The input layer takes 22 values describing the snake’s situation. The output layer produces three values: turn left, go straight, or turn right.

The 22 inputs capture everything the snake needs to know:

- Eight directions showing where the food is (up, down, left, right, and four diagonals)

- Eight directions showing if obstacles are in the way

- Two values indicating if the snake is moving toward the food

- Four distances, one to each wall
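To make the list above concrete, here is a rough sketch of how such a 22-value vector could be assembled. The function name `buildInputs`, the order of the groups, and the 0/1 and normalized encodings are all assumptions for illustration; the project may encode its inputs differently.

```javascript
// Hypothetical layout of the 22 inputs. Order and encoding are assumptions.
// Directions: up, up-right, right, down-right, down, down-left, left, up-left
// (screen coordinates, so y grows downward).
const DIRECTIONS = [
  [0, -1], [1, -1], [1, 0], [1, 1],
  [0, 1], [-1, 1], [-1, 0], [-1, -1],
];

function buildInputs(head, heading, food, isBlocked, boardSize) {
  const inputs = [];
  const fx = food.x - head.x;
  const fy = food.y - head.y;

  // 8 values: 1 if the food lies exactly along this direction, else 0.
  for (const [dx, dy] of DIRECTIONS) {
    const sameSide = Math.sign(fx) === dx && Math.sign(fy) === dy;
    const onLine = dx === 0 || dy === 0 || Math.abs(fx) === Math.abs(fy);
    inputs.push(sameSide && onLine ? 1 : 0);
  }

  // 8 values: 1 if the neighbouring cell in this direction is blocked.
  for (const [dx, dy] of DIRECTIONS) {
    inputs.push(isBlocked(head.x + dx, head.y + dy) ? 1 : 0);
  }

  // 2 values: is the current heading closing the gap on the x / y axis?
  inputs.push(heading.x !== 0 && Math.sign(fx) === heading.x ? 1 : 0);
  inputs.push(heading.y !== 0 && Math.sign(fy) === heading.y ? 1 : 0);

  // 4 values: distance to the top, right, bottom, and left wall, scaled to 0..1.
  const max = boardSize - 1;
  inputs.push(head.y / max, (max - head.x) / max, (max - head.y) / max, head.x / max);

  return inputs;
}
```

Here `isBlocked(x, y)` is a caller-supplied function that reports whether a cell is a wall or part of the snake's body.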

The network processes these inputs through two hidden layers with 18 and 10 neurons. The hidden layers use sigmoid activation. The output layer uses ReLU. The snake picks whichever output has the highest value.
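Picking the highest output and turning it into a new heading might look roughly like this. The names `argmax`, `ACTIONS`, and `nextHeading`, and the clockwise heading indices, are assumptions made for this sketch.

```javascript
// Output index 0 = turn left, 1 = go straight, 2 = turn right (assumed order).
const ACTIONS = ["left", "straight", "right"];

// Returns the index of the largest value; ties go to the earliest index.
function argmax(values) {
  let best = 0;
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i;
  }
  return best;
}

// Headings are indexed clockwise: 0 = up, 1 = right, 2 = down, 3 = left.
function nextHeading(headingIndex, outputs) {
  const action = ACTIONS[argmax(outputs)];
  const turn = action === "left" ? -1 : action === "right" ? 1 : 0;
  return (headingIndex + turn + 4) % 4;
}
```

One thing to keep in mind with this sketch: if all three outputs are equal (for example, all zero after ReLU), `argmax` falls back to the first index, so the snake turns left.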

Genetic Algorithm

The population has 80 snakes. When a generation ends, the algorithm sorts snakes by their fitness score. Better snakes score higher.

Fitness comes from a few factors. Moving toward food adds points. Moving away subtracts points. Eating food gives a big bonus. Hitting walls or yourself gives penalties. There is also a timer that kills snakes who wander too long without eating.
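A per-step scoring function along these lines could look like the sketch below. Every number in it (`REWARD`, `MAX_IDLE_STEPS`) is a made-up placeholder, and the `snake` object shape is hypothetical; the real values took tuning and are not listed here.

```javascript
// Placeholder weights, chosen only for illustration.
const REWARD = { closer: 1, farther: -1.5, food: 50, death: -20 };
const MAX_IDLE_STEPS = 100; // hypothetical starvation limit

function manhattan(a, b) {
  return Math.abs(a.x - b.x) + Math.abs(a.y - b.y);
}

// event is "ate", "crashed", or "moved".
function scoreStep(snake, prevHead, newHead, food, event) {
  snake.idleSteps += 1;

  if (event === "ate") {
    snake.fitness += REWARD.food;
    snake.idleSteps = 0; // eating resets the starvation timer
  } else if (event === "crashed") {
    snake.fitness += REWARD.death;
    snake.alive = false;
  } else {
    // On a grid, one step changes the Manhattan distance by exactly 1.
    const gotCloser = manhattan(newHead, food) < manhattan(prevHead, food);
    snake.fitness += gotCloser ? REWARD.closer : REWARD.farther;
  }

  // Kill snakes that wander too long without eating.
  if (snake.idleSteps >= MAX_IDLE_STEPS) snake.alive = false;
  return snake;
}
```

Making the penalty for moving away slightly larger than the reward for moving closer is one way to discourage snakes from farming points by circling; whether the project does this is not something I can confirm from the description above.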

The top 15% of snakes survive to the next generation. They become parents. The algorithm creates babies by combining weights from two parents. Each weight has a 50% chance of coming from either parent.
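Selection and uniform crossover can be sketched in a few lines. This assumes each brain's weights have been flattened into a single array, and the function names are mine, not necessarily the project's.

```javascript
// Keep the top fraction of the population, sorted by fitness (highest first).
function selectParents(population, survivalRate = 0.15) {
  const ranked = [...population].sort((a, b) => b.fitness - a.fitness);
  const count = Math.max(2, Math.round(population.length * survivalRate));
  return ranked.slice(0, count);
}

// Uniform crossover: each weight comes from parent A or parent B with equal odds.
// `random` is injectable so the function can be tested deterministically.
function crossover(weightsA, weightsB, random = Math.random) {
  return weightsA.map((w, i) => (random() < 0.5 ? w : weightsB[i]));
}
```

With a population of 80, a 15% survival rate leaves 12 parents.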

After crossover, each child has a 30% chance of mutation. Mutation adds small random changes to all weights in the network. This introduces variation and helps escape local optima.
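A minimal mutation step, under the same flattened-weights assumption, might look like this. The `strength` parameter (how large the random nudges are) is a guess; only the 30% rate comes from the description above.

```javascript
// With probability `rate`, nudge every weight by a random amount in
// [-strength, +strength). Otherwise return an unchanged copy.
function mutate(weights, rate = 0.3, strength = 0.1, random = Math.random) {
  if (random() >= rate) return weights.slice();
  return weights.map((w) => w + (random() * 2 - 1) * strength);
}
```

The function returns a new array either way, so a parent's weights are never modified in place.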

The Architecture

The project runs entirely in the browser using HTML canvas. No libraries. No frameworks. Just vanilla JavaScript.

The code splits into several modules:

- Shapes - Drawing rectangles and circles on canvas

- Brain - Neural network with layers, weights, and forward propagation

- Snake - Movement logic and collision detection

- Generations - Selection, crossover, and mutation

- Game - Main loop and board management

Each snake lives on its own 80x80 pixel board. The main view shows 10 columns and 8 rows of these mini-boards. This lets you watch 80 snakes evolve at once.
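Placing each mini-board on the shared canvas comes down to a little index arithmetic. The sketch below assumes the boards are laid out row by row with no gaps, which gives an 800x640 pixel view; `boardOrigin` is a name I made up for it.

```javascript
const BOARD_SIZE = 80; // pixels per mini-board
const COLUMNS = 10;
const ROWS = 8;

// Top-left pixel of the board belonging to snake number `index` (0..79).
function boardOrigin(index) {
  const col = index % COLUMNS;
  const row = Math.floor(index / COLUMNS);
  return { x: col * BOARD_SIZE, y: row * BOARD_SIZE };
}
```

Each snake can then draw relative to its own origin, for example by offsetting every rectangle it draws by `boardOrigin(i)`.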

How the Brain Works

The neural network is built from scratch. No TensorFlow or other libraries. Just matrix multiplication and activation functions.

A layer stores its weights as a 2D array and biases as a 1D array. Forward propagation multiplies the input by the weights, adds the biases, then applies the activation function.
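As a rough sketch of that idea, a layer could be written like this. The class name `Layer`, the random initial range of -1 to 1, and the `feedForward` helper are assumptions, not the project's actual code.

```javascript
class Layer {
  constructor(inputSize, outputSize, activation, random = Math.random) {
    // weights[j][i] connects input i to neuron j; values start in [-1, 1).
    this.weights = Array.from({ length: outputSize }, () =>
      Array.from({ length: inputSize }, () => random() * 2 - 1)
    );
    this.biases = Array.from({ length: outputSize }, () => random() * 2 - 1);
    this.activation = activation;
  }

  // output[j] = activation(sum_i(weights[j][i] * inputs[i]) + biases[j])
  forward(inputs) {
    return this.weights.map((row, j) => {
      let sum = this.biases[j];
      for (let i = 0; i < inputs.length; i++) {
        sum += row[i] * inputs[i];
      }
      return this.activation(sum);
    });
  }
}

// Run the inputs through each layer in order.
function feedForward(layers, inputs) {
  return layers.reduce((values, layer) => layer.forward(values), inputs);
}
```

Chaining three such layers with sizes 22 to 18, 18 to 10, and 10 to 3 gives the network shape described earlier.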

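The sigmoid function squashes values into the range between 0 and 1. That suits the hidden layers, where smooth, bounded activations are useful (no gradients are computed here, since the weights are evolved rather than trained). The ReLU function returns the input if it is positive and zero otherwise. That works for the output layer, where we just need to pick the highest value.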
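Both activation functions fit in one line each. These are the standard definitions, written here as a small sketch:

```javascript
// Sigmoid: maps any real number into the open interval (0, 1).
const sigmoid = (x) => 1 / (1 + Math.exp(-x));

// ReLU: passes positive values through and clamps negatives to zero.
const relu = (x) => Math.max(0, x);
```

For example, `sigmoid(0)` is 0.5, `relu(-2)` is 0, and `relu(3)` is 3.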

Watch It Learn

Open the page and watch the first generation. The snakes move randomly, crashing almost immediately. Check again at generation 10. Some snakes now avoid walls consistently.

By generation 50, interesting behaviors emerge. Snakes circle near the edges. They track food more reliably. Some develop a habit of moving in loops until food appears nearby.

The learning is not perfect. Sometimes a good strategy spreads through the population, then gets lost to mutation. Sometimes the snakes get stuck in local optima. They find a mediocre strategy and never discover better ones.

Challenges

Getting the fitness function right took several tries. My first version only rewarded eating food. Snakes would wander randomly until they stumbled onto food by accident. No learning happened.

Adding rewards for moving toward food changed everything. Now snakes that got closer to the food scored higher even if they died before eating. This gave the genetic algorithm a meaningful fitness signal to follow.

The mutation rate also needed tuning. Too low and the population converged on mediocre solutions. Too high and good strategies got destroyed before they could spread.

What I Learned

Genetic algorithms are simple to implement but hard to tune. Small changes in the fitness function or mutation rate lead to very different outcomes.

Neural networks do not need to be deep to solve simple problems. A network with 22 inputs, two hidden layers, and 3 outputs was enough for basic snake gameplay.

Running 80 parallel simulations made the evolution visible. You could literally watch better strategies emerge and spread through the population.

The project also taught me that evolution has no foresight. The snakes never learned to plan ahead. They react to their current situation. Sometimes that is enough. Sometimes they trap themselves in corners.