Artificial Intelligence and Machine Learning Professor

Andrés Bello Catholic University

I taught the fundamentals of artificial intelligence and machine learning, including neural networks, convolutional neural networks, recurrent neural networks, generative adversarial networks, and reinforcement learning.

Teaching AI and Machine Learning

In 2024, everyone was talking about ChatGPT and AI, but most people didn’t understand how it worked.

I taught AI and Machine Learning at Andrés Bello Catholic University for eight months. My goal was simple: teach students how AI actually works, not just how to use it. Instead of just calling APIs, we built things from scratch so they could understand what’s happening under the hood.

Starting with Basic Machine Learning

We started with a simple question: how does a machine know if a background is light or dark?

Instead of just explaining it, we built ChromAI. We used Logistic Regression to teach a computer to detect contrast. It was simple, but it helped students understand the basics.
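As a rough illustration of the idea behind ChromAI, the sketch below trains a logistic regression by hand on synthetic colors. It is not the course's code: the luminance-based labeling rule, the function names, and the training settings are all assumptions made for this example, and the class may well have used a library implementation instead.

```python
import math
import random

def luminance_label(rgb):
    """Hypothetical labeling rule: 1 if the background is light, else 0."""
    r, g, b = rgb
    return 1 if 0.299 * r + 0.587 * g + 0.114 * b > 0.5 else 0

def sigmoid(z):
    # Clamp z so math.exp never overflows on extreme inputs.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, epochs=100, lr=0.5):
    """Fit weights and bias with plain stochastic gradient descent."""
    w = [0.0, 0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict_is_light(w, b, rgb):
    """Return True when the model thinks the background is light."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, rgb)) + b) >= 0.5

# Synthetic training set: random RGB colors with channels in [0, 1].
random.seed(0)
train_x = [(random.random(), random.random(), random.random()) for _ in range(500)]
train_y = [luminance_label(x) for x in train_x]
w, b = train(train_x, train_y)

# A light background calls for dark text, and vice versa.
for name, color in [("white", (1.0, 1.0, 1.0)), ("black", (0.0, 0.0, 0.0))]:
    text = "dark" if predict_is_light(w, b, color) else "light"
    print(f"{name} background -> {text} text")
```

The model only ever sees three numbers per color, which makes it a convenient first example: students can inspect the learned weights and see which channel matters most for perceived brightness.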

Then a student asked how Netflix recommends shows. That led to ScreenBuddy, where we used KNeighbors to build a recommendation system. Students learned how computers can find patterns in what people like.
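The nearest-neighbor idea behind a recommender like ScreenBuddy can be sketched in a few lines. This is an illustrative version written from scratch, not the project's code: the ratings table, the cosine similarity measure, and the `recommend` helper are all hypothetical choices made for this example.

```python
import math

# Hypothetical ratings: user -> {title: score from 1 to 5}
ratings = {
    "ana":   {"Alien": 5, "Heat": 4, "Up": 1},
    "bruno": {"Alien": 5, "Heat": 5, "Up": 1, "Blade Runner": 5},
    "carla": {"Alien": 1, "Heat": 2, "Up": 5, "Coco": 5},
    "diego": {"Alien": 4, "Heat": 4, "Blade Runner": 4, "Coco": 2},
}

def cosine_similarity(a, b):
    """Cosine similarity computed over the titles both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(a[t] ** 2 for t in shared))
    norm_b = math.sqrt(sum(b[t] ** 2 for t in shared))
    return dot / (norm_a * norm_b)

def recommend(user, k=2):
    """Rank unseen titles by the similarity-weighted ratings of the k nearest users."""
    target = ratings[user]
    neighbors = sorted(
        ((cosine_similarity(target, other), name)
         for name, other in ratings.items() if name != user),
        reverse=True,
    )[:k]
    scores, weights = {}, {}
    for sim, name in neighbors:
        if sim <= 0:
            continue  # ignore users with nothing in common
        for title, score in ratings[name].items():
            if title not in target:
                scores[title] = scores.get(title, 0.0) + sim * score
                weights[title] = weights.get(title, 0.0) + sim
    return sorted(scores, key=lambda t: scores[t] / weights[t], reverse=True)

print(recommend("ana"))
```

Here the two users closest to "ana" both liked the same film she has not seen, so it ranks first. A real system would need far more data and some handling for users with few ratings.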

Building Neural Networks

Once students got comfortable, they wanted more. One student said he wanted to build a neural network from scratch, not just use a library.

So we did. We rebuilt AlexNet, a famous computer vision model. We wrote each layer of the network by hand. It failed a lot at first, but after adjusting the settings, it learned to identify flowers. When training took too long, we learned about Transfer Learning using MobileNetV2, which saved us a lot of time.

After that, we tried more advanced projects. We used the Segment Anything Model (SAM) to build RemoveBG, which removes backgrounds from images automatically. We also used Autoencoders to make low-quality images look better by filling in missing details.

Working with Audio and Time-Based Data

Next, we worked on audio. We built Denoiser using SepFormer, which removes background noise from audio and makes speech clearer.

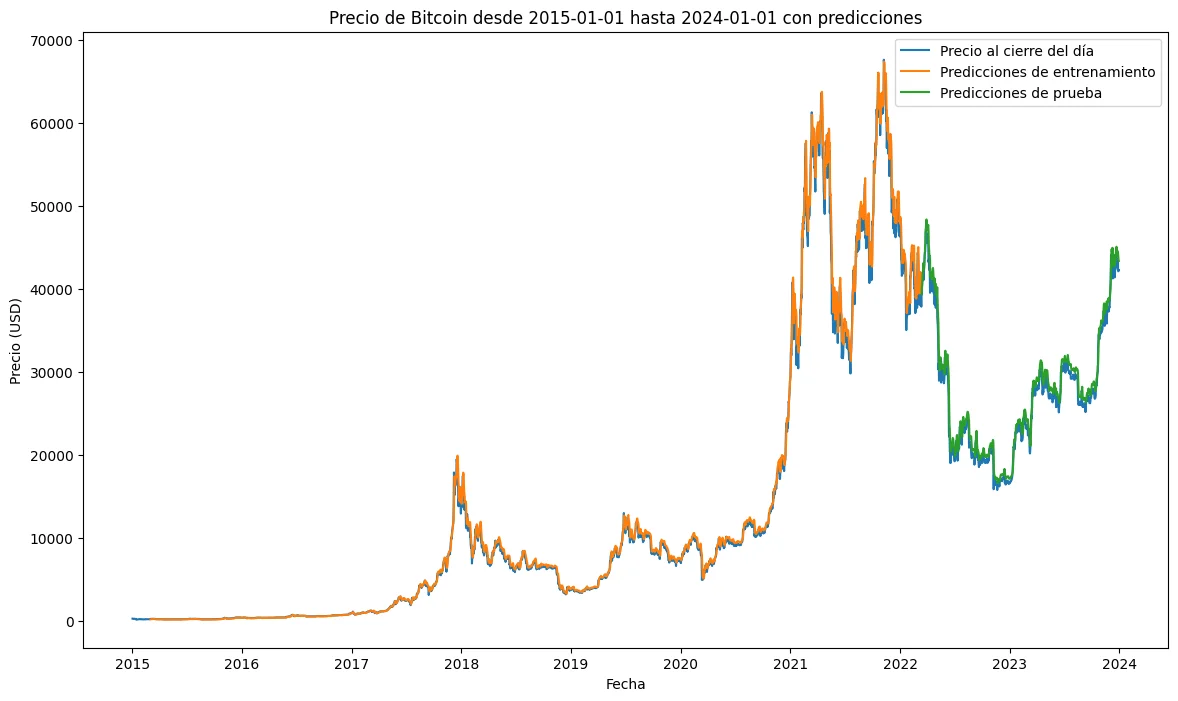

Then students wanted to try predicting Bitcoin prices. We used Recurrent Neural Networks (RNNs, LSTMs, and GRUs) with data from Yahoo Finance. One student’s model predicted Bitcoin would hit $72,000, and it actually happened.

Advanced Projects

Near the end of the semester, students wanted to try more complex things. One asked about building an AI that could have conversations.

We built AIFriend by combining Text-to-Speech (Coqui-TTS) and Speech-to-Text technology. It could have voice conversations in real-time, which showed students how modern AI assistants work.

We also built a Transformer from scratch for translating Spanish to English. Students coded the Self-Attention mechanism themselves, which helped them understand how modern language models work.
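For readers who have not seen it, single-head scaled dot-product self-attention fits in a short pure-Python sketch. This is a simplified illustration rather than the students' implementation: the helper names, the identity projection matrices, and the toy input are assumptions, and a real Transformer adds multiple heads, masking, and learned weights.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Return (output, attention weights) for a sequence of token vectors x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    # Score every query against every key, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

# Toy input: three tokens with two features each (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
out, weights = self_attention(x, identity, identity, identity)

for row in weights:
    print([round(wt, 3) for wt in row])
```

Each row of `weights` shows how much one token attends to every other token, and the output is the corresponding weighted mix of value vectors.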

For our final project, we used Reinforcement Learning to train an AI to land a lunar rover. It crashed hundreds of times, but eventually learned how to land safely.

What Students Learned

The best part wasn’t just the projects. It was seeing students gain confidence.

At the start, many were scared of terms like “Neural Network.” By the end, they were discussing which activation functions work best and why.

The course earned a rating of 5.91 out of 6.0. But what mattered more was that students got internships and jobs because they could show recruiters real projects they had built themselves. They could explain how attention mechanisms work because they had coded them.

Here are some of the projects developed during the course:

ChromAI

Class 1. ChromAI is an AI that determines the optimal font color (light or dark) for a given background color, improving readability in web interfaces. It uses machine learning to predict whether text should be white or black on that background.

ScreenBuddy

Class 2. ScreenBuddy is an artificial intelligence app that recommends movies based on the preferences of other users with similar likes. The project combines theory and practice in recommendation systems, machine learning, and data analysis.

Identity

Class 3. Identity is an artificial intelligence project that implements a facial unlocking system. Given a person's image, the model is able to identify who it is and, if appropriate, unlock the device. This approach seeks to simplify device access, avoiding traditional methods such as PINs or patterns.

AlexNet

Class 4. This project focuses on developing an artificial intelligence capable of identifying elements in images. Through a series of practical implementations, different convolutional neural network (CNN) architectures are explored.

RemoveBG

Class 5. This project aims to develop an Artificial Intelligence model capable of automatically removing backgrounds from images.

Upscaling

Class 6. This repository contains a Jupyter Notebook that demonstrates how to use pre-trained AI models from Hugging Face and Replicate to perform image super-resolution. The goal is to upsample a small image (e.g., 96x96) to a larger one (e.g., 512x512) by improving its quality, rather than simply scaling the pixels.

Denoiser

Class 7. This project is the result of a class on audio processing. The main objective is to develop an artificial intelligence capable of removing background noise from an audio recording.

Bitcoin price predictions

Class 8. This project uses a recurrent neural network (RNN) to analyze the price of Bitcoin and predict its fluctuations.

Spanish to English Translator

Class 9. This project implements an artificial intelligence model to translate text from Spanish to English, using Natural Language Processing (NLP) techniques and a transformer. It also includes a web interface for interacting with the translator.

Usage of third party models

Class 10. This repository contains a Jupyter Notebook tutorial that demonstrates how to deploy and use third-party AI models with tools such as Cloudflare Workers AI or Azure ML Studio.

AIFriend

Class 11. AIFriend is a voice-to-voice artificial intelligence designed to converse with you. You can speak to it and it will respond in a human voice, simulating a conversation with a friend. This project was born as a response to the growing lack of social interaction in an increasingly digital world.

Lunar Rover Landing

Class 12. This project focuses on training a reinforcement learning agent to land a rover on the moon safely and efficiently.

Explore More Experiences

Software Engineer

SignatureAPI

I am responsible for leading the development of Signature API, a developer-focused, API-first platform for adding electronic signature capabilities to your applications and workflows.

Programming Fundamentals Professor

Andrés Bello Catholic University

I was responsible for equipping students with the foundational knowledge and skills necessary for success in software development and computer science.

Lead Software Engineer

Unbanked

I was responsible for leading the integration of multiple debit and prepaid card services issued with white-label partners who wanted to launch a card that could use their token for payments. Accomplishments include implementing a plug-in system, managing the team, and improving internal system architectures.